A new preprint recently posted on PsyArXiv may (or even should) be of interest: Redefine statistical significance by Benjamin et al.

One sentence summary from the authors:

We propose to change the default P-value threshold for statistical significance for claims of new discoveries from 0.05 to 0.005.

First page or so:

The lack of reproducibility of scientific studies has caused growing concern over the credibility of claims of new discoveries based on “statistically significant” findings. There has been much progress toward documenting and addressing several causes of this lack of reproducibility (e.g., multiple testing, P-hacking, publication bias, and underpowered studies). However, we believe that a leading cause of non-reproducibility has not yet been adequately addressed: Statistical standards of evidence for claiming new discoveries in many fields of science are simply too low. Associating “statistically significant” findings with P < 0.05 results in a high rate of false positives even in the absence of other experimental, procedural and reporting problems.

For fields where the threshold for defining statistical significance for new discoveries is P < 0.05, we propose a change to P < 0.005. This simple step would immediately improve the reproducibility of scientific research in many fields. Results that would currently be called “significant” but do not meet the new threshold should instead be called “suggestive.” While statisticians have known the relative weakness of using P ≈ 0.05 as a threshold for discovery and the proposal to lower it to 0.005 is not new (1, 2), a critical mass of researchers now endorse this change.

We restrict our recommendation to claims of discovery of new effects. We do not address the appropriate threshold for confirmatory or contradictory replications of existing claims. We also do not advocate changes to discovery thresholds in fields that have already adopted more stringent standards (e.g., genomics and high-energy physics research; see Potential Objections below).

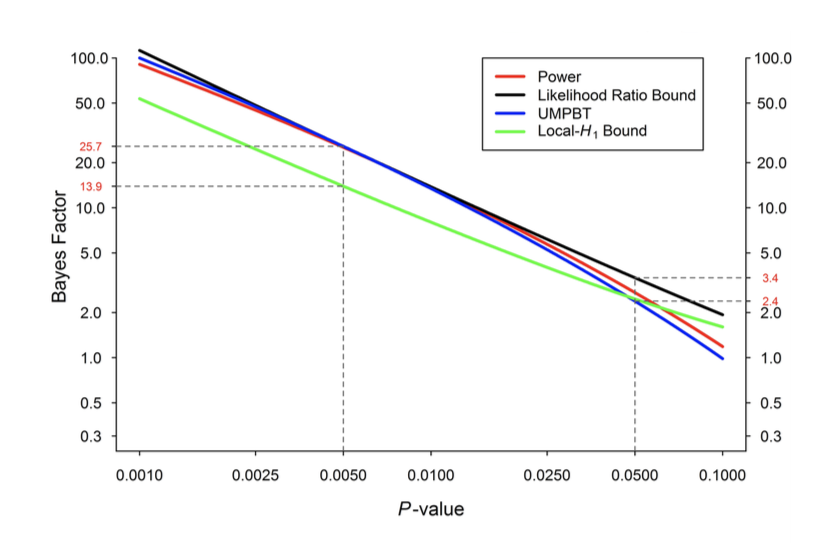

We also restrict our recommendation to studies that conduct null hypothesis significance tests. We have diverse views about how best to improve reproducibility, and many of us believe that other ways of summarizing the data, such as Bayes factors or other posterior summaries based on clearly articulated model assumptions, are preferable to P-values. However, changing the P-value threshold is simple, aligns with the training undertaken by many researchers, and might quickly achieve broad acceptance.

There are also a few news stories about this that offer useful additional information and summaries. Examples include:

- Tom Chivers in Buzzfeed: These People Are Trying To Fix A Huge Problem In Science

- Dalmeet Singh Chawla in Nature: Big names in statistics want to shake up much-maligned P value

- Kelly Servick in Science: It will be much harder to call new findings ‘significant’ if this team gets its way

Overall I am not 100% certain whether I support this call, but I am leaning towards supporting it. Setting a low bar for statistical significance has clearly created a lot of problems in research. However, creating a higher bar and trying to apply it across the board could also create some issues.

Regardless, I do think that this issue is very important, and certainly for microbiome studies we need a broader discussion of what thresholds we should use when testing for significance. And I think in many cases the community has allowed too low a bar to be set.
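For a rough feel of why the threshold matters, here is a minimal back-of-the-envelope sketch. It is not taken from the preprint's own code; the function name `false_positive_risk` and the input numbers (prior odds of 1:10 that a tested effect is real, 80% power) are illustrative assumptions. It also holds power fixed when the threshold changes, which in practice would require larger samples.

```python
def false_positive_risk(alpha, power, prior_true):
    """Share of 'significant' results that are false positives.

    alpha      -- significance threshold (type I error rate)
    power      -- probability of detecting a real effect
    prior_true -- assumed fraction of tested hypotheses that are real
    """
    false_pos = alpha * (1.0 - prior_true)  # null is true, test rejects anyway
    true_pos = power * prior_true           # effect is real, test detects it
    return false_pos / (false_pos + true_pos)


# Illustrative assumptions only: 1 real effect per 10 null effects, 80% power.
prior_true = 1 / 11
power = 0.8

for alpha in (0.05, 0.005):
    risk = false_positive_risk(alpha, power, prior_true)
    print(f"threshold {alpha}: {risk:.1%} of significant results are false positives")
```

Under these assumed inputs, roughly 38% of "significant" findings at 0.05 would be false positives, versus about 6% at 0.005. Different prior odds or power would shift those numbers, which is part of why a single across-the-board threshold deserves discussion.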

Anyway – this seems to be worth a read and worth discussing with your colleagues.
